The standards for the EU’s hotly-anticipated AI Act – specifically how they will be developed and what their role will be – rarely make the headlines. This is despite the fact that they will be crucial elements in making this fundamental piece of EU legislation a reality.

But even as trilogue negotiations are set to begin imminently, significant work remains to be done to ensure that the actual AI standardisation process does not end up contradicting the Act’s core objectives, notably around inclusion and transparency.

Indeed, most of the current discussions are centred on other important issues, starting with agreeing on a clear definition of what exactly is meant by the term AI … in the AI Act.

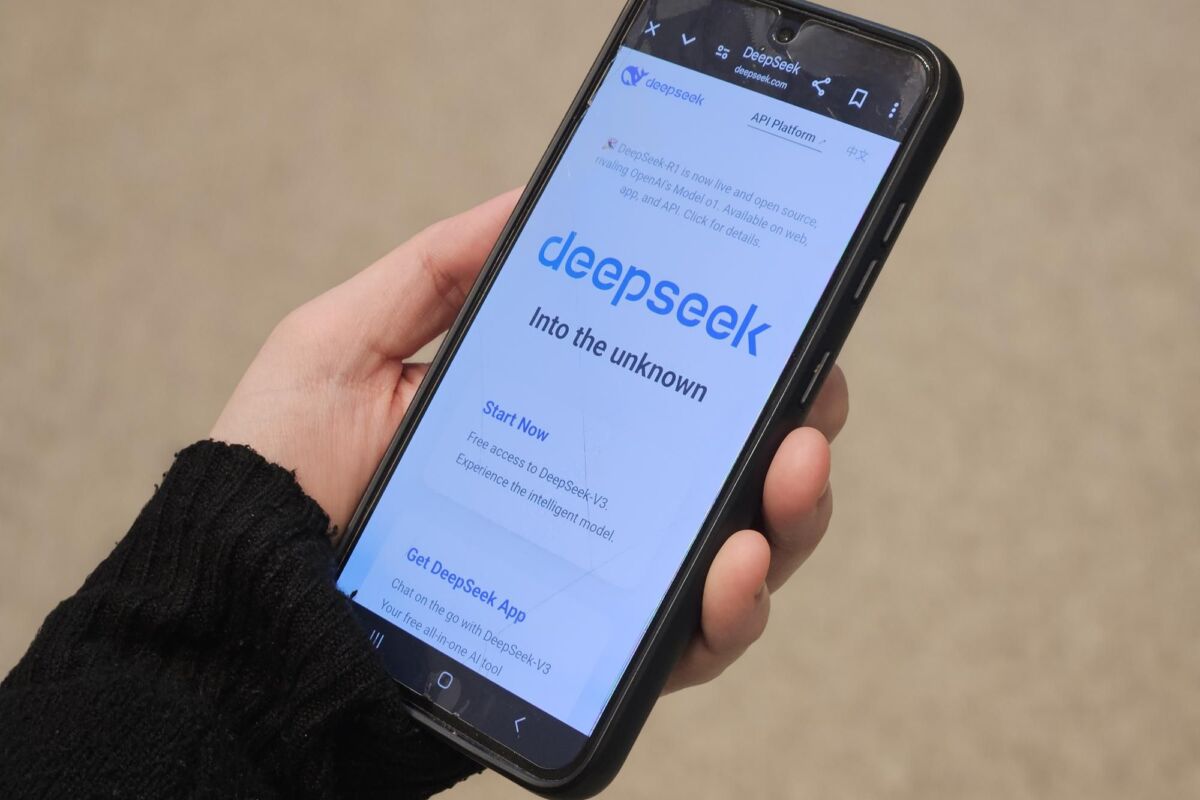

Intense negotiations are also under way over the Council’s position supporting the use of AI systems for security purposes, concerns voiced in the European Parliament (EP) about the risks posed by specific applications such as emotion recognition systems, and the regulation of so-called general-purpose AI systems, which have recently captured public attention with the stunning (and somewhat worrying) success of ChatGPT and other generative AI systems.

But while the AI Act could become law as soon as 2023, the rules it contains will need to be supported by adequate standards before they can become fully operational. Developing those standards is a process led by two crucial – yet mostly unknown – actors: the European Committee for Standardization (CEN) and the European Committee for Electrotechnical Standardization (CENELEC). Both are private international nonprofits, with 34 member countries and close connections with European stakeholders (‘European’ in the broad sense, by including the UK for instance).

Thanks to the work of CEN-CENELEC in developing European standards, AI developers will be able to meet the Act’s essential requirements, which will vary depending on the level of risk posed by their systems. Thus far, this important mechanism in the Commission’s proposal has remained largely untouched by the Council and EP and will probably remain an uncontroversial issue during the trilogues.

AI… as in Access and Inclusion

This does not mean, however, that the role of standard-setting organisations will not be controversial per se. Historically, technology standardisation processes have been largely driven by industry and the private sector. By design, private companies have more decision-making power (national standardisation bodies are mostly composed of companies) and resources than other stakeholder groups, such as civil society organisations.

This unequal influence is further amplified by standard-setting organisations’ general working practices that tend to be secretive and relatively hostile to outsiders.

For example, CEN-CENELEC JTC 21, the joint technical group that will be tasked with developing the AI Act’s standards, doesn’t publicly share information on who its members and experts are, nor does it provide a clear overview of its working process. This lack of transparency applies to the process, but also at times to the standards themselves. Yet it is vital that the emerging standards for the AI Act – such a crucial piece of legislation – are made publicly available.

Indeed, the EU has recognised access and inclusion in setting standards as a fundamental issue, as seen in the 2022 EU standardisation strategy. Despite improvements over the years, there is arguably still a need for more participation from civil society and consumer representatives.

This is particularly true for the AI Act, as technical standards are expected to define when and how AI systems will respect (among other things) fundamental rights. This is the reason why the Commission’s latest draft standardisation request mandates CEN-CENELEC to include human rights expertise and civil society participation in formulating AI standards. Still, some argue that even this is far from sufficient given the interests and issues at stake.

Such concerns have been fuelled by the recent sidelining of civil society groups from the drafting process of the Council of Europe’s new Convention on Artificial Intelligence, human rights, democracy and the rule of law. This was reportedly requested by the US (despite its observer status), and later approved by other delegations to secure American buy-in.

Regional or global AI standards?

There is a strong geopolitical element to current debates around AI standards. The US is indeed pushing the EU for more cooperation on standards, especially after the Commission undertook several initiatives designed to limit the influence of non-EU-based entities in standardisation processes.

This is well illustrated by the recent controversy around the European Telecommunications Standards Institute (ETSI), which is generally more open to individual foreign companies. In December 2022, the decision to exclude ETSI from the draft standardisation request for the AI Act was indeed seen as a way to diminish foreign companies’ influence.

Indirectly, this poses a question concerning the nature and scope of the standards to be developed for the AI Act. Though these standards are expected to have a global reach, they could very well accelerate the regionalisation of international standards. Some experts have suggested that there could be more alignment if the European standardisation process better leveraged existing international efforts, including the more advanced IEEE standards.

Another cause of fragmentation could be related to the AI Act’s actual enforcement. Indeed, the enforcement system envisioned in the proposal gives significant powers to national regulators, which could reach their own interpretations of the Act and its standards, pointing to another area where the Commission will eventually need to step in.

Tick tock, tick tock…

Standards will play an essential role in enforcing the EU’s AI legislation, and the clock is ticking.

If the AI Act is adopted in 2023, CEN-CENELEC will only have two years to formulate and reach consensus on a series of AI standards, which must then be shared with and adopted by all relevant stakeholders.

In meeting this ambitious (and possibly unrealistic) deadline, both the EU institutions and CEN-CENELEC need to devise new forms of democratic accountability over standard-setting, for instance through more effective civil society participation.

This is to make sure that the resulting standards not only serve large industry interests but also properly address very real and legitimate fundamental rights concerns.

Time really is tight, and they need to get it right.